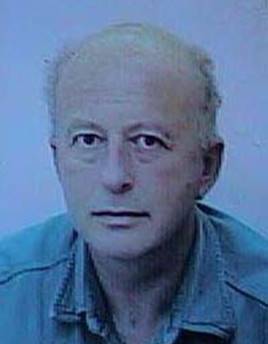

Witold

LITWIN

ACM-Fellow

2001 (1st ever in France)

Position :

Professor at University Paris 9

Dauphine

Emeritus since Sept. 1, 2014

Content that follows might not be up to date. Sorry. Life

is flying.

Database

Courses (taught until the

retirement)

Director of CERIA (until

2009):

CENTRE DES ETUDES ET

DE RECHERCHES EN INFORMATIQUE APPLIQUEE

Member of LAMSADE (present)

Short Bio (pointing also towards full CV)

ACM-Fellow 2001 Award

Ceremony (Amateur Video) Play

(DSL

or Local Net & Windows Media Player and Real One Player Recommended; click

on Play button without waiting for the full file download. That

one may take some minutes otherwise)

ACM Special Event to Honor Leading European Computer Scientists. Oct 8, 2009, Paris.

Amateur video features the main part of the ceremony. Wmv or svf or, or flv, allow a minute or so before the streaming begins).

Prof Dame Wendy Hall, ACM President, in green jacket, greets European

Recipients of major research-oriented ACM Awards. ACM VP (sorry not to retain

the name) calls us by name and the original Award Citation. Sir Tony Hoare,

called, regretfully, before the video begins, for his 1980 Turing Award, starts

the “pas de deux”.

The two photos, 1 and 2, are derived from

the video and feature eight from nine honored European ACM Fellows. The picture misses the last one called in for

technical reasons. I possibly fix it in near future. Finally, guess who folks

on the final enclosed souvenirs are, P1, P2, P3, especially the

blond lady with the (Andy Warhol’s to respond to some inquires) scarf.

Address

Prof. Witold LITWIN

Lamsade

Université Paris Dauphine

Pl. du Mal. de

Lattre de Tassigny

75016 Paris FRANCE

Witold.litwin (add @ followed by dauphine.fr)

My Virtual Computer History Museum

Unsung

Pioneers of Distributed Data Universe which We Take for Granted Today

Open

to public 24/7. Enter Here

Latest posts

Witold Litwin. SIR

SQL for Logical Navigation and Calculated Attribute Free Queries to Base

Tables. In Proc. of 27th International Conference on Enterprise Information

Systems, ICEIS 2025, Vol. 1, 191-201, Scitepress

(publ.). Presentation slides here.

Witold Litwin. SIR

SQL for Logical Navigation and Calculated Attribute Free Queries to Base

Tables. Lamsade Res. Rep., April 15, 2024, 15p, pdf. Preliminary version of the one published at ICEIS

2025.

The

report expands “Manifesto for SIR SQL” with figures, additional details and

examples.

Witold

Litwin. Manifesto for SIR SQL. 9th Int. Conf. On

Information And Education Innovations (ICIEI 2024).

April 12-14, 2024, 5p, ACM Digital Library, ACM ISBN 979-8-4007-1640-9/24/04 https://doi.org/10.1145/3664934.3664948

Pdf protected by on-demand password, to respect ACM

copyrights, here

.

Witold

Litwin. Prototype Front-End for Stored and Inherited Relations On SQLite3:Demo for Supplier-Part Database. Aug. 15,

2023, 1p, pdf.

Witold Litwin. Curious

Case of LIST Aggregate Function. Lamsade Research Memo. July 3, 2022, 3p. pdf.

We present just discovered curious disguises of LIST

aggregate function we proposed almost 20 years ago. The proposal seemed ignored

by the mainstream DBSs. But, it appears now that all adopted it. We were not

aware, since, strangely, DBSs used all function names different from ours. Also

surprisingly, we could not locate any publicly available research article or

technical report about any of those offerings. We could only see the grey

literature: reference manuals, blogs, tutorials, forums…

Witold Litwin. Stored and Inherited Relations with PKN Foreign Keys. 26th European Conference on Advances in Databases and (Information

Systems (ADBIS 22), Megadata Wokshop,

Torino, Sept. 5-8. 12p. Springer (publ.).

A stored and inherited

relation (SIR) is a 1NF stored relation enlarged with inherited attributes

(IAs). SIRs are the only known view-savers without any denormalization for

typically logical navigation free (LNF) and calculated attribute free (CAF)

queries. Recall that LN means joins among base tables, while CAs serve as the

virtual ones do at popular DBSs, but can be as general as in a view. E.g., with

aggregate functions or sub-queries or value expressions sourced in base tables

other than the one with the CA. Recall also that the usual benefit of LNF and

CAF queries is substantially lesser procedurality. We first show how the usual

schemes of stored tables with so called PKN foreign keys, define also

implicitly SIRs with IAs for LNF queries. We then discuss SIRs with such keys

and with explicit IAs, CAs for CAF queries in particular. We show how one can

define any such SIR less procedurally than till now. We then show that making

any popular relational DBSs SIR-enabled should be simple. Preexisting

applications could remain not affected. Alternatively, DBA could easily alter

any so it profits from LNF and CAF queries as well, as any new

applications. We postulate major DBSs

becoming SIR-enabled “better sooner than later”. To make LNF and CAF queries

standard, for the benefit of millions of SQL users.

Witold Litwin. Natural Stored and Inherited Relations. ICTH 2021, Procedia

Computer Science, ScienceDirect.com, Elsevier (publ.), 8p. Preprint: pdf. Powerpoint presentation:

pptx.

Earlier version: Lamsade Research e-report, July 25, 2020, 6p. pdf

A stored and inherited relation (SIR) is a 1NF

stored relation augmented with inherited attributes (IAs). We show that one may

consider every usual scheme of a stored normalized relation R with foreign keys

as implicitly defining a natural

SIR R. For every foreign key, all the attributes of the referenced

relation other than the referenced key become IAs of SIR R. Each IA

inherits the name and every value of the referenced attribute where foreign key

value equals a referenced one. IAs cannot introduce any normalization

anomalies, while a typical select-project-join query to a stored (only)

relation R at present, becomes select-project only towards natural

SIR R. In popular terms, such queries become logical navigation free

(LNF), without any additional data definition effort from the database

administrator. We show how discussed stored relation schemes define natural

SIRs. We show also that making a typical relational DBS SIR-enabled should be

simple. Preexisting relations with foreign keys may be easily converted to

SIRs, preserving existing applications, while providing for LNF queries for new

ones. We conclude that since inception of the model, relational DB schemes have

not been read as they should be or, at least, could be, i.e., as defining

natural SIRs. Logical navigation within otherwise simple SQL queries bothered

generations uselessly. We claim that every major DBS should become SIR-enabled

“better sooner than later”.

Witold Litwin. Stored

and Inherited Relations with Foreign Keys for Logical Navigation Free and

Calculated Attribute Free SQL Queries to Normalized Base Tables. Lamsade Technical e-Report, June 16, 2020. pdf

Abstract — A stored and inherited relation (SIR) is a

1NF stored relation enlarged with inherited attributes (IAs). The latter make

SIRs as normalized base tables the only known view-savers for the logical navigation free (LNF) and calculated attributes free (CAF) queries

to such tables, [1]-[5]. Recall that LNF means no equijoins between foreign and

referenced keys, while CAF queries avoid possibly complex value expressions,

e.g., with aggregate functions and sub-queries. We now show the typical at

present schemes of base tables with foreign keys may define instead SIRs termed

natural. The latter provide for LNF

queries, unlike the same scheme tables at present. Next, we show that one may

enlarge such schemes with useful IAs, CAs especially, getting CAF queries as

bonus. Below, we first recall SIRs basics. Then, we discuss the natural SIRs.

Next, we analyze the enlarged schemes. Then, we generalize the relational

design to SIRs, the concept of NFs especially. Afterwards, we show that

providing SIRs over a popular relational DBS, e.g., SQLite3, should be simple.

Preexisting applications could remain not affected, while new applications

could profit from LNF and CAF queries.

We conclude that major relational DBS should become SIR-enabled “better

sooner than later”. LNF and CAF query will become a standard then, at last,

simplifying the life of, likely, millions of SQL clients.

Sushil Jajodia, Witold

Litwin, Thomas Schwarz. Trusted Cloud SQL DBS with On-the-fly AES Decryption/Encryption. Lamsade Technical Report, August 15, 2016. pdf. Revised version published

at 3rd Intl. Workshop on Privacy and Security of Big Data (PSBD 2016), at 2016 IEEE Intl. Conf. on Big Data Washington DC.

Narrated talk ppt.

A Trusted Cloud Database System manages client-side encrypted cloud

DBs. Queries may include encryption keys. The DBS

decrypts/encrypts the data on-the-fly at the cloud. Plaintext is only in

protected run-time variables. Stored data are by default probabilistically

encrypted through AES. Any SQL queries are feasible, with negligible processing

overhead and practical storage overhead. This is a major advance over the

current alternative research proposals. We detail capabilities of a trusted

DBS. We adapt SQL to client-side key management. Queries may remain usually

almost as non-procedural as now. A prototype implementation appears easy.

Notice on Dec. 23,

2019. Browse through the recent abundant MS Docs on Azure

SQL Server with secure enclaves. Start perhaps

with the September 14, 2017 CTO’s framework statement: “Introducing Azure

confidential computing”, here.

In our humble opinion, no need to be rocket scientist to see that Azure SQL

Server is straight in the footsteps of our paper. Up to now, we could not

locate nevertheless any MS paper with the reference to. Old-time Academia

customs would imply so. If you spot one, forward the info, please.

Witold Litwin. Stored

and Inherited Relations. Lamsade Technical Report,

Mars, 2016. Revised, 33p, March 2017 snapshot archived in

Computing Research Repository (CoRR), pdf.

10p revised conference version untitled “SQL for Stored and Inherited

Relations” published at ICEIS 2019, pdf , pptx.

The universally applied Codd’s

relational (data) model has two constructs: a stored relation, with stored

attributes only and a view that has only the inherited ones. In 1992, we

proposed an additional construct, mixing both types of attributes. Examples

showed it attractive. However, no further work followed. We now come back to

our proposal in depth. We show that stored relations expanded with inherited attributes

may become more faithful to the reality. Less procedural SQL queries follow,

free of or with reduced logical navigation and free of selected value

expressions. We propose clauses for Create Table creating inherited attributes

among the stored ones. We show these clauses always less procedural than the

Create View statements providing for the same simpler queries. The views may be

also more procedural to maintain. We adapt Alter Table statement to our

relations. We show how our construct subsumes two partial helpers with less

procedural SQL queries which already made it to popular DBSs. We illustrate our

proposals with numerous examples, rooted in the “biblical” Supplier-Part

database. We show how to implement our relations on popular DBSs with negligible

operational overhead. We conclude that relational DBSs should accommodate our

proposal better sooner than later and we discuss further research.

Witold Litwin. Manifesto for Improved Foundations of Relational Model. Lamsade Res. Note, May 2019, 3p, pdf. Dec. 16, 2019 snapshot archived in Computing Research Repository (CoRR), pdf.

Extended version

presented at 6th Int. Symp. on

Emerging Information, communication and

Networks (EICN-2019). Published in Procedia Computer

Science, 160, (2019), 624-628, Elsevier, (publ.). Talk

slides: pptx (updated for seminars in March 2020 at (1)

SRC-UCSC, Santa Cruz, CA (2) IBM Almaden Reseach Center, CA.)

Normalized relations extended with inherited

attributes can be more faithful to reality and support logical navigation free

queries, properties available at present only through specific views. Adding

inherited attributes can be nonetheless always less procedural than to define

any such views. Present schemes should even suffice for relations with foreign

keys. Implementing extended relations on popular DBSs appears also simple.

Relational model foundations should evolve accordingly, for benefit of likely

millions of DBAs, clients, developers….

Sushil Jajodia, Witold

Litwin, Thomas Schwarz. On-the fly AES Decryption/Encryption for Cloud SQL Databases. Lamsade Technical Report, June 15, 2015 & ACM

Repository. pdf

We propose the client-side AES256 encryption for a cloud

SQL DB. Column ciphertext may be deterministic or

probabilistic. We trust the cloud DBMS for security of its run-time variables,

e.g., through a moving target defense. The client may send AES key(s) with the

query. These serve the on-the-fly decryption of selected ciphertext

into plaintext for query evaluation. The DBMS clears the key(s) and the

plaintext at the query end at latest. It may deliver ciphertext

to decryption enabled clients or plaintext otherwise, e.g., to

browsers/navigators. The scheme functionally offers to a cloud DBMS

capabilities of a plaintext SQL DBMS. AES processing overhead appears

negligible for a modern CPU, e.g., a popular Intel I5. The deterministic

encryption may have no storage overhead. The probabilistic one doubles the DB

storage. The scheme seems the first generally practical for an outsourced

encrypted SQL DB. An implementation sufficing to practice with appears easy. An

existing cloud SQL DBMS with UDF support should do.

Short version to appear at DEXA 2016. Narrated slides ppt . Download may take couple of min. on ADSL.

Recent Research Activities

1. Clouds and Security

We worked on this topic since 2009. At the Center for Security

of Information Systems (CSIS) at GMU and at Dauphine. With

Prof. Sushil Jajodia, Director of CSIS & with Thomas Schwarz, Prof. at SCU

then. Lamsade site indexes the publications.

The above presented research is the current goal. It follows our 2014-15 work

proposing a new homomorphic encryption scheme for

queries with SQL value expressions for a cloud DB. We believe that scheme the 1st

practical one for its goal. See our article in Dexa-2015 proceedings

(Springer).

Before, we worked on cloud data privacy and

(encryption) key recovery using a cloud as a new tool. The former direction led

to a method for controlled access to a scalable collection of data records.

E.g., documents, like SharePoint does. We believe, however, our method the

fastest at its time and likely still. The key recovery issue led to two

proposals for key copy management. First one partitions the copy into fragments

stored on a few randomly chosen cloud nodes among many. A legitimate user may

easily recover the key. The attacker should illegally gain access to almost all

of the candidate nodes. The event that appears highly unlikely in practice.

The other method trusts a key copy to an

escrow agent, encrypted by the key owner in a specific way. The particularity

is that the owner may define the recovery complexity at will. The idea is that

to recover the key in some practical time, e.g., 1 minute, may require then a

large cloud, say 1000 nodes. The cloud uses a scalable

distributed virtual data structure, see the next section. The complexity should

inversely depend on the trust into the escrow service. The goal is to make an

illegal recovery, e.g., by an insider, expensive and traceable enough to

usually deter attacks. A legitimate recovery may cost the same. But, can still

be preferred to the disaster of the key loss. A legitimate client may also know

a secret info defined by the key owner and unknown to the escrow service,

called discount. A discount makes the recovery faster or the cloud smaller. We

feel the method practical, e.g., as a Web service. One could pay there only

when the recovery is requested or as an insurance etc.

1.Scalable Distributed

Virtual Data Structures

Research on key recovery brought us around 2012 to

the proposal of a new type of data structures. We called them scalable distributed virtual data structures

(SDVSs). Like a traditional data structure, an SDVS is a collection of (key,

non-key) structured records. Key values are 0,1..M-1

for some large M. But, these records are not stored. Instead, they are virtual. A search dynamically creates any virtual record and

matches it against the search criteria. A positive match retains the record for

further processing. A negative match drops any just-created one.

As the

name suggests, the next property of an SDVS is to typically use a large cloud.

The final distinguish property is that every SDVS search carries a user-defined

time limit, say R over the search time at every of its cloud nodes. The overall

worst search time is then R usually as well. To meet R, a node that gets a

search over some records, first estimates its throughput. If the calculus shows

the node unable to meet R, it splits the key set. The split sends a part of the

set, say P, basically a half, to another node, for processing of the virtual

records with keys within P. The partitioning is recursive till every node

estimates itself able to respects R. Presently, the hash partitioning and the

range partitioning of virtual records have been studied.

Use of

SDVSs allows the user defined worst-case time limit on the requested key

recovery. Smaller R usually requires a larger cloud, evidently. The cloud can

be heterogeneous, i.e. with nodes having different throughputs. One may also

tune the cloud size as well as the average R. One may finally make SDVS

reliable, i.e., protected against node failures. See the papers for more.

Beyond key recovery, SDVSs constitute a new tool for

the operational research. They appear 1st to allow some traditionally hard

calculations to be completed within the user-defined practical time limits. The

condition is the availability of a sufficiently large cloud, to spread the

calculations enough. One may cite the knapsack problems, the travelling

salesman problems, the combinatorial enumerations, e.g., of all the

permutations… It is an open research field at present.

2.Knapsack Joins

We have proposed this concept with my dear co-author in my BDA 2010

invited talk, in Toulouse, The intention was to

facilitate the knapsack problems calculations over relational DBs. A k-way knapsack q-join over numerical columns r1…rk selects every tuple (t1…tk) with

t1+…+tk ≤ C, where C is a user defined constant. A practical query may

also carry the Order By and Top K clauses. For instance, someone may search for

ten sets of five real estate properties to buy from a listing of a thousand

with the cumulated price tag as close as possible but not over 1M$. We showed

ways to make knapsack joins more efficient than through the naïve nested loop.

That one was and possibly still is, the only technique used by DBMSs at the

time of writing. One may easily see that our example is then yet only a dream.

My talk opened the conference. A 2nd invited talk closed it. It was

by a nice researcher from Yahoo, New York. Amazingly, it turned out that she

was investigating similar issues. The goal of her work was indeed a

hypothetical Yahoo assistant for folks searching for a collection of IPhone’s

accessories fitting best a given budget. I did not hear since about anything

commercial, at Yahoo or elsewhere that would follow up her proposals or ours.

3.Scalable Distributed Data Structures (SDDSs)

SDDSs are a class of data structures we have proposed

with M-A Neimat and D. Schneider at HP Labs, Palo

Alto, in 1992. This concept and the subsequent outcomes were apparently one “leg”

of my ACM Fellow Award. SDDSs were intended for multicomputers.

This naming was coined by A. Tanebaum, in his famous

book. It designated for networks of share-nothing processors (nodes) linked

through high-speed network, wide-area or local, or a bus. We published the 1st

SDDS termed LH* at ACM-SIGMOD 93. Soon after, HP Labs patented it. An SDDS can

span over potentially any number of nodes, scaling up or down, i.e., on more or

less nodes, transparently for the application. SDDS files can reach sizes and

access performance impossible for more traditional file structures. For best

access performance, an SDDS file should reside for processing in the

distributed RAM. Performance analysis has demonstrated experimentally the

search speed of up to 300 times faster than to a local (magnetic) disk. The

capabilities of SDDSs open new perspectives for many applications. Today, in

2016, many SDDSs have been proposed. Some are in everyday use by billions (see

below). There are many papers on the subject with thousands of references to

(see Google). SDDSs are especially investigated and now operationally built as

the infrastructure for P2P systems, grids and clouds: public, private,

hybrid.... CERIA prototypes focused mainly on so-called today RAM Clouds and NoSQL (cloud) databases, except for SD-SQL Server (Scalable

Distributed SQL Server), the 1st ever prototype Scalable Dsitributed

relational DBMS. Google for the research papers or see the one below. See also

the page of S. Soror or her video demo. The RAM Cloud orientation is in part

due to 1992 visionary lecture at Berkeley by Jim Gray. See my MS Redmond 2001

talk below or my SDDS class (in French) where one (almost) original slide of

Jim Gray on “distance to data” is present. Notice also the recent RAMCloud project headed by John Ousterhout (Stanford),

although its papers, surprisingly, do not cite any of ours. For further info on

SDDSs – see our classes Cloud Databases.

Research on SDDSs over last decade (see the publications

of CERIA Lab and of myself):

SDDS Files under Windows

SD SQL Server

LH*RSP2P, specifically designed for

P2P systems (Google Talk )

SDDSs using Algebraic Signatures

SDDSs for Pattern Matching

AS-Index

SD-Rtrees

Secure SDDSs

LH*RE : LH*

with Recoverable Encryption Keys

Privacy of Outsourcing to the Cloud

for Readers Selected through Client-Side Encryption

Recoverable Encryption Through Noised Secret (2012)http://www.lamsade.dauphine.fr/~litwin/Recoverable

Encryption_10.pdf

Selected References

Introduction to SDDSs (ppt), (not revised for many years, sorry about, see my

class in French for the updated stuff).

SDDS-2000 : A Prototype System for Scalable Distributed Data

Structures on Windows 2000 (ppt). MS Research, Redmond & NWU, Chicago, 2001.

This talk introduced SDDSs to MS Research audience. As

usual, most folks in the room did not bother to read the papers published

before. The talk largely predated any popular industrial development we

discussed. I still wonder to what extent it influenced the future Azure Table

designers. I was invited by two old pals D. Lomet & Per-Ake

Larson. With D. Lomet we have proposed earlier the bounded disorder (BD) file

access method. See our publication list and the Web for the follow up work.

With Per-Ake we had long lasting coop over dynamic

and linear hash schemes.

On the souvenir side, after the talk I chatted with

Rick Rachid. He was in charge of MS Research

then. He noticed the SDDs potential.

But, he was rather focused on the prototype MS wristwatch. It is now called

generally a smartwatch. He proudly displayed a

Cartier-like he had. We accessed the MFST Nasdaq

quote somewhere. Well, I expressed him doubts about the bright future of the

invention, given the display size. Smart phones invasion (headed then by Nokia)

was about to begin and these were naturally much less limited on that side.

Vice versa, his nice comments, based on his experience of building his

distributed OS, did not seem sharing my

enthusiasm for the bright future of SDDSs, i.e., of the cloud infrastructure as

we say today. Well, today clouds are in use by billions as we all know (see

also below). MS got finally so convinced to clouds that their main fan there

got promoted CEO. Actually, even the name cloud was coined later by MS. Smart

watches attempt to make it as smart phone accessories. No news about MS smart

watches as yet, in Feb. 2016. Researcher life has sometimes amazing turns.

LH* : A Scalable

Distributed Data Structure (ACM-TODS) pdf

The paper expands earlier results, reported at Sigmod Conf. It presents especially two versions of LH*: with

and without so-called coordinator. The latter uses a circulating token instead.

It was never implemented beyond what our paper reports (work by J. Levy, during

internship at HPL). After reading the paper, compare LH* features to those of

DHTs (Distributed Hash Tables). Especially, compare the messaging cost of both

types of structures. The paper proposing DHTs should say something about. But

didn’t. Worse, it missed any reference to LH* work. This disregard for honest

scientific practice was surprising. At least, since it was coming from Berkeley

U. You might wish to look also at the LH* scan operation. It was never

implemented to our best knowledge. A likely reason was the lack of good

programming model. Like the popular MapReduce

model. Basic MapReduce

uses nevertheless a static hash for the data distribution. It’s well-known as

often inconvenient. Amazon’s Elastic Map/Reduce is closer to what LH* could

provide. Again, a direction for volunteers.

LH*RS a highly-available scalable distributed

data structure (ACM-TODS 2005)

Presents the prototype

designed by Rim Moussa (her Ph. D.) and the theory

basis. Builds up on earlier Ph.D. prototypes. One by

J. Karlson (Uppsala) proposing a particularly elegant

variant called LH*LH.

The other by F. Bennour, called SDDS-2002, built

specifically for Windows RAM files. See CERIA publication list (or the Web).

Notice that in last 30 years, only a handful of French DB research papers

passed the ACM-TODS publication standards. Rim’s prototype was also

demonstrated at VLDB in Toronto. Early results for LH*RS were reported also in

a Sigmod paper.

RP*

: A Family of Order Preserving Scalable Distributed Data Structures (VLDB-94) pdf

See Google’s landmark

paper (OSDI06) on Bigtable. You likely used that

without even noticing. As billions of others do every day, to get pages from

Google. Appreciate yourself the similarity/differences between the BigTable structure and RP* schemes in our paper. In the

same spirit, take a peek on Azure Table, Mongo DB, PNUTS and Hive.

Needless to hide

nevertheless our opinion that all these great products root in one of RP*

schemes, called RP*S in our paper. None of their documentation we’re aware of

refers to it however. Unlike the honest scientific practice requires. I asked

Google guys why, when I gave my LH*RSP2P Google Talk, in Google Tech Talks

Series, 2007). All but one did not respond. The nice one, who, by the way, is

by himself among top researchers there, said: sorry,

we did not spot the paper. But we’ll cite it from now on. We’re systems guys.

Do not follow up DB conferences. Well,

VLDB where the paper appeared is among main DB gatherings. DB researchers

besides, follow mainstream conferences outside the field, e.g. Usenix, Mascots...

So with all due respect, we do not fully subscribe to this excuse.

Especially that the no-cite practice became disgracefully widespread in the

last decade. Despite great tools like Google, (with its BigTable),

Scholar, DBLP, Bing… you name it. Commercial R & D world seems particularly

affected by the disease. But, the university research is no more exempt either.

As we already mentioned for DHTs.

High-Availability LH*

Schemes with Mirroring (COOPIS-96) pdf

As the title suggests, we proposed a fully replicated LH* scheme. Like

any such scheme, it is simple to implement. The drawback is the N*100% storage

cost for N replicas, as usual as well.

LH*S

: a High-availability and High-security Scalable Distributed Data

Structure (IEEE-RIDE-97) pdf

(Res. Rep. Version)

First in the series below, exploring parity codes for high-availability

SDDSs. Culminating with the LH*RS in ACM-TODS referred to above. The low

storage overhead makes such schemes potentially more attractive for a RAM

Cloud.

LH*g

: a High-availability Scalable Distributed Data Structure (CERIA & U. Linkoping Res. Rep. & IEEE TKDE Journal, Vol.

14, Issue: 4, 2002) pdf (Res. Rep. Version)

LH* Schemes with

Scalable Availability (IBM-Almaden Res. Rep.) pdf

Work patented by IBM. Republished in IEEE tutorial book

(J. Menon ed.). See the Web.

Design

Issues for Scalable Availability LH* Schemes with Record Grouping (DIMACS 99, Princeton U. 1999 & IBM-Almaden

Res. Rep.) Idem. pdf

Design Issues for

Scalable Availability LH* Schemes with Record Grouping (DIMACS 99, Princeton U. 1999,

Inv. Talk, Ms Powerpoint) ppt

LH*RS: A High-Availability Scalable Distributed Data Structure using Reed Solomon Codes pdf (CERIA Res. Rep. & ACM-SIGMOD 2000, Dallas).

Scalable Distributed Data Structures for

High-Performance Computing ppt (Aurora-2000,

1st Europ. Conf. on High. Perf. Comp., Vienna 2000, Inv. Talk, Ms

Powerpoint).

LH*RSP2P:

A Scalable Distributed Data Structure for P2P Environment. Conf. Intl. sur les NOuvelles TEchnologies de la REpartition

(NOTERE 2008), Lyon June 2008 (Keynote).

pdf.

This scheme is remarkable by its one message forwarding cost for the

worst case key-based addressing in an SDDS over a P2P cloud. That is, where every

node is both client and server. Like, e.g., seti@home

cloud. It is the least possible such cost for these clouds. We recall that LH*

in general is the only to provide the least such cost, of two messages, for any

SDDS over a client-server cloud, i.e., where all nodes are servers only. To

compare, the same cost for DHT is O (log N) for N-node cloud. The only “competitor” to LH*RSP2P is the

scheme proposed by B. Liskov & al. That one has

however in addition a specific thought more sporadic messaging cost to

propagate the file scalability.

The paper reports on initial results of Ph.D. research by Y. Hanafi.

See the Thesis & his journal paper for more.

An

Overview of a Scalable Distributed Database System SD-SQL Server. British Natl. Conf on Data Bases (BNCOD-06),

Dublin, Juillet 2006. Keynote.

This was 1st in the series devoted to SD-SQL Server: a scalable

distributed cloud DBMS. The system was the subject of the Ph.D. by S. Sahri. We

have presented SD-SQL Server twice to SQL Server R & D team at MS Redmond.

The talks seemed well-received (as well as at BNCOD). However, the transparent

table scale up of

SD-SQL Server still does not have the industrial counterpart (Feb. 2016). Neither in SQL Server nor in MS Azure SQL Database, nor elsewhere.

The popular manual approach instead, by so-called industrially sharding, e.g., in Azure, is comparatively in the Middle

Age.

Multidatabase Systems

MDBSs are systems for management of data in multiple

autonomous databases. Work on this concept was the other “leg” of my ACM Fellow

Award. We proposed it in 1980, within SIRIUS project at INRIA. The intention

was to generalize adequately the database model, i.e. the concept and

architecture of a DBS (Database Management System). Our “root” was the concept

of a multi(data)base defined as “DB set of DBs”. Quite

popular today, e.g., see the architecture of SQL Server and more at the

Web. Our first publication on the topic

was at the 2-nd ACM Annual Computer Exposition in Lafayette, LA. See our

publication list for the reference. To my happiness, the talk was well

received. Perhaps with the exception of the alligator that

run away when I stepped upon on the university garden pathway in the

dark. A major benefit was also the acquaintance with a dear friend since, Peter

Scheuermann, Prof. Emeritus, NWU.

First prototype MDBSs, named MRDSM and SYSIDORE were

designed at INRIA and presented at 1st Intl. Symp. on Distributed Databases in Paris, March 1980. One can still (June 09) see the short

description of MRDSM as one of pioneering CS tools at the Multics

site maintained at MIT.

A central capability of an MDBS is the multidatabase definition and manipulation language, e.g.

our MSQL for the relational data. This capability lacked to DBSs. Today,

research on the multidatabase systems is among most

popular topics. Most of DBSs silently became MDBSs, e.g., MsAccess,

SQL-Server, Sybase, Interbase, Oracle, MySQL, even

DB2 (the latest among the converted ones). Postgres

resisted up to now (Feb. 2016). The industrial multidatabase

capabilities are still rather limited as compared to those known at the

research level. See, e.g., the capabilities proposed by MSQL language. See our

journal papers on it or M. Rusinkiewicz & al

book. Many research issues remain open. Techniques for MDBSs apply also

generally to Meta-search engines on Internet. E.g., Rob's SearchEngineZ interfacing dozens of search engines. Or the MetaCrawler that searches Google,

Yahoo, Bing an others. Also - EDreams, Google

Shopping, Comparateur Obsèques i.e., Funeral Cost Comparator (I get this and

similar friendly spam daily since my retirement started) …

There is a very large literature on MDBSs. E.g., Google just displayed the estimate of 237K entries for my “multidatabase” keyword. If interested & French

understanding, start perhaps with my MDBS classes. Also see the Virtual Museum

above for notable grey literature, witnessing the early effort y its authors who should not get forgotten.

Today, the utility of multidatabase

systems is obvious. It wasn’t so, by far, when we started to talk about the

idea. The dominant trend was that of a single (integrated) DB. Possibly very large whenever needed. This was actually the

motivation for the name of the well-known VLDB conference series. Also, there

was a paper by Tom Steel, I believe, conjecturing the construction of a DBMS

managing worldwide data as a (single) DB in a couple of years. Besides, we had

heat discussions at DB conferences. To illustrate how dominant the single DB

idea was, to aim at by DBMS designers, let me recall one anecdote. I was on a plane to Cannes with one of the DB

arena friends now, to attend VLDB-81 there. He was already and still is, among

top researches in our field. When I asked for opinion about the idea, his

response was straight: “Why should we bother about managing multiple DBs if we

can manage only one”. That was the end

of the discussion, for the flight and until today.

4.Other Research

Our research in 70-80-ties on virtual and especially

linear hashing is sufficiently known to not be further recalled. Perhaps,

except for an anecdote. Our 1st paper ever on it was a Note in highly

prestigious “Comptes Rendus”

of French Académie des Sciènces.

To my stunning surprise, the Académie changed my

original wording “Hash-Coding” in the title into “Codage

Découpé”. Everyone concerned in France knew the

meaning of the former, as there was no official French substitute. In contrast,

no one knew the latter. The Académie just coined it

because of my Note, in their role of the Guardian of Purity of French language.

To make the link, they left however the “hash-coding” in the abstract. I

wondered why they did not use therefore the existing, although not yet used for

the computers, word “hachage”. The response was: no

concept of coding in that one. Anyhow, the story seems unique & I’ve never

ever seen the term “codage découpé”

applied since.

Now, let me recall some topics I also investigated

40-30 years ago. I believe they merit more attention or at least citations

they’ve got since.

Structural

Numbers for Graphs & Electronic Circuits pdf

The concept was proposed as algebra for formal analysis of electrical circuit to Chinese Academy of Sciences by K.T. Wang in 1934. Had some follow up there. That dried out during the Chinese tumult. The topic got rediscovered in 1950 in US by R.J. Duffin and followed up by a few, especially S. Bellert in 1962. Who became in 1969 my Magister Eng. Thesis advisor at Warsaw Polytechnic School. I continued the research at INRIA, ending up with the above pointed to INRIA Scientific Note 7 in 1972.

These were nicely edited, highly selective publications,

at most one per month. The caveat was they had to be in French.

A structural number (SN) basically represents a graph. Any graph,

not only an electrical circuit. Algebraic operations operate upon, in a

particularly simple way. Enumerate all the circuits, trees, merge/cut edges…

The Note clarifies some aspects of SNs definition and manipulation, especially

for, so-called, generalized SNs. The latter apply to hypergraphs.

It also proposes the first to my knowledge implementation of the algebraic operations

on NSs, extending to hypergraphs as well. We apply

our method to generate electric circuit parameter formulae, especially for

impedance. The Thesis described also my prototype. Not the Note. The prototype

was indeed already little outdated. In particular, it was still stored on IBM

punched cards.

The graph manipulations characterize many domains. Our results were

a tip of an iceberg, aiming at our specific application. Nevertheless, they

were also a generic graph management tool. As such, SNs seemed competitive to

the usual stuff, at least. Despite this potential, about nothing happened

since. I could not spot any citation. One likely reason: the Note is invisible

to main search engines. I guess publishing in French did not help. Also, the

keyword “structural number” seems highly popular with Civil Engineering in a

different sense. These references appear naturally first.

More generally, I could spot only a handful of related papers. One was a 1972 article from India on active electrical circuits. Then, a couple of Polish papers appeared in 80-90s. The authors applied manually the SNs to the mechanical engineering. So, a perspective of interesting work apparently still remains.

Digital

Processing of Phonocardiograms

Phonocardiogram (PCG) is the recording of heart sound

(murmur). The manual signal interpretation by the physician may diagnose grave

illnesses. Like for ECG. However, the former seems less popular then the

latter. Probably since the manual processing is lengthily. My goal was to help

it through digital filtering & automatic diagnosis. Using what was then

called pattern recognition & now machine learning, usually.

My work

constituted the Doctorat en Informatique in

INRIA. It was published later in renowned Medical Informatics journals (search

the publication list pointed to below). I demonstrated the prototype validating

both goals. The signal processing introduced to PCG processing the

interactive vocoding and FFT-based digital

filtering (FFT means Fast Fourier Transform, we recall). The automatic

classification through clustering used Benzecri’s factorial

analysis. I applied the apparatus to two dangerous heart anomalies: aortic valvular stenosis and subaortic idiopathic stenosis. The diagnosis took a

split of a second, instead of hours of strain to the physician eyes.

My English paper has 3 citations at Scholar. One of

those is also at MS Academic. I thought this result regretfully showing about

no interest in the field. I was dead wrong. While doing this update, found dozens

of follow ups. Including, US, Japan, an entire book & a 1995 survey at NIH

site, cited itself over 200 times. The effort even included some French work

not far from Paris around 2012. All folks continue along the path of vocoding & FFT filtering towards some

classification algorithm. Why all but three avoided then citing us? Feel free

to question the authors. In the meantime, the rules of scientific

honesty we’re raised with seem again the past.

Still, it did not seem that the bulk made it into the commercial

world. Perhaps the current interest in Internet of Things will help, e.g., with

distant patient monitoring. On anecdotic side, in early 80ties I got a letter

from HP. Kindly informing me about a brand new interactive FFT-based signal

filtering & analysis hardware & software box. The letter was allegedly

informing me given my work in the domain. This was no more for over ten years.

I understood HP somehow acknowledged the parenthood. Anyhow, perhaps

HP still sells the babies. Clustering and more data analysis would be a useful

addition if not done already.

Value Dates for Concurrency Control

A value date is the concept loved by banks, in routine

use. It is the time when a transaction must commits, neither earlier nor later.

For instance, your check deposit through an ATM becomes usually valid precisely

at 6 pm same business day. That is the time when the credit shows up at your

account and you can start spending it. The calculus of the interest the deposit

perhaps brings starts at the value date as well.

Our papers on this topic adapted the concept to the DB

transaction management. It looked particularly attractive for large distributed

ones. Including those reaching planetary scale, e.g., in Google’s Spanner. As for

banks, the value date was giving a predictable time for every sub-transaction

to end up and even to commit synchronously although in isolation. The mechanism

we still believe better than the popular eventual consistency, without any such

limit. Here is one of our later papers about, from 2001. We shamefully forgot

where it was published (pdf).

The value date idea caught attention of DB research and had a follow up. Not enough for the concept to enter the DB practice, to our knowledge. We believe, it still might and should. One reason was perhaps confusion with so called real-time transactions, proposed by our old pal Hector Garcia Molina & al. Hector unfortunately passed away already. That transaction also had a time limit, but was also obviously wished to commit at earliest. We had the pleasure to match both ideas as early as in 1988, when Hector invited me for the talk at Princeton. It seems a number of folks working later on transactions, missed this important difference.

For anecdote, I had a chance to have outstanding

co-authors. My gentle initial, H. Tirri, became later

the boss of Nokia Research, Palo Alto. Another friend, Y. Breitbart,

also ACM Fellow, regretfully already passed away as well. Finally, Avi Silberschatz, Prof. at Yale, when happens to be back from

Israel, does not need any introduction.

Computer Life

for Internet.

This work had for goal future organization of Internet. The proposal was to model it upon our own. The result would be an Internet of Beings, in modern wording. Beings should have their own life, could ultimately be aware of their existence and perhaps even imagine ours. We could appear them as God(s). Amazingly, the construction was recursive. Ourselves, we could be Beings in computers of our God(s) and so on.

One of the papers became an invited talk at Usenix. Another - became keynote for InfoJapan

90 (Information Technology Harmonizing With Society).

The latter conference had another keynote. That is how I made acquaintance with

Steve Jobs. His exciting talk was about NEXT. Soon after, NEXT was however a

thing of the past.

I met an unexpected number of colleagues who liked it.

The idea had thus a nice follow up. In turn, I liked the cooperation with the

coauthors. Nick Roussopoulos became since an ACM

Fellow. Gio Wiederhold

retired from Stanford, but only officially. Passed away unfortunately beginning

2023. Not surprisingly, the practical side of this research is yet to come. The

astonishing part was that I could not find citations of the InfoJapan

paper. Only the initial UMIACS Research Report got up to date nine citations on

Scholar. It is unfortunately the only visible at the Web. I manage to locate

the InfoJapan Proceedings only off-line in some US

University libraries and the Congress one. Amazon Student offers the book for

incredible price of $500 (Feb. 2016). All text in the book is under the copyrights.

I guess all this explains that. I found recently my text for InfoJapan prepared for the reviewers, who accepted it

provided some improvements. Here is at least the former one (pdf).

Amazingly, the 1st level of our model recursivity, i.e., that we are perhaps ourselves in some computer(s), appeared serious to astronomers. See there and elsewhere on the Web. Understandably, the proposers do not seem aware of our work. All things considered, again I believe that a lot of interesting work still lies ahead.

Relations with Inherited

Attributes

Codd’s relational model has two types of relations. A base relation with the stored attributes only. A view has only the inherited ones, from some base relations or views. This dichotomy may appear awkward. A single concept of a relation with stored and/or inherited attributes suffices. The popular wisdom says indeed: who/what can more, can less.

We made this proposal and analyzed some consequences for an RDB definition and manipulation, in this 1992 HPL Report (pdf